In today’s hyper-connected world, social media has become a stage where we present our best selves. Scroll through any feed, and you’ll see holidays, achievements, smiling faces, and exciting plans. I recently noted a post from Mark Anderson about the upcoming BETT conference, referring to all the countdowns, grand claims and other positive posts currently being shared. Rarely do we encounter the mundane, the struggles, or the moments of doubt. This curated reality, while aesthetically pleasing, comes with a hidden cost: the rise of imposter syndrome and related feelings of needing to do more than is possible, or of not having done enough.

Imposter syndrome is that nagging feeling that we don’t measure up, that everyone else is doing better, living fuller lives, and achieving more. One look at social media in the lead-up to the BETT conference could easily leave you feeling like a BETTposter. Ironically, many of us who feel this way also contribute to the cycle by posting positive content ourselves, myself included. I find myself suffering from imposter, or BETTposter, syndrome, yet I also often share positive posts about plans, achievements or ideas, including posts about speaking at BETT. Why? Because that’s what people like, or perhaps more accurately, that’s what the algorithm likes. And in a world where visibility equals opportunity, we often feel compelled to play by those rules. It is through engaging on social media in the past that I have connected with so many amazing educators and technologists, with conversations that started on social media often continuing into the real world. I certainly wouldn’t want to miss out on these opportunities, including possible future ones, through either not posting or posting things which others won’t like, whether this is due to the algorithms involved or not.

So, what’s the solution? In relation to BETT and social media, it’s about accepting that what is presented on social media is carefully curated and therefore presents a particular view or lens on things, often tilted towards the positive. The reality will, in most cases, fall short of this. In terms of the event itself, it is simply a case of accepting that there will be more people there than you can possibly meet, more stands to see than is physically possible and more talks to listen to than can be logistically achieved, short of a bit of time travel or having multiple clones of yourself. So the perfect is not achievable, leaving us to do whatever we “reasonably” can. This means going with a plan in terms of what talks to attend, what stands to visit and who you want to catch up with, leaving time to travel around the event, time for rest and lunch, etc., and time for the accidental or unintended meetings you had never planned but which add so much to your thinking. But with this plan, also accept that no plan survives contact with BETT (or maybe the enemy!) and therefore there will be talks you planned to see but missed, people you wanted to meet but never got round to, and people you did meet but didn’t capture the all-important selfie with.

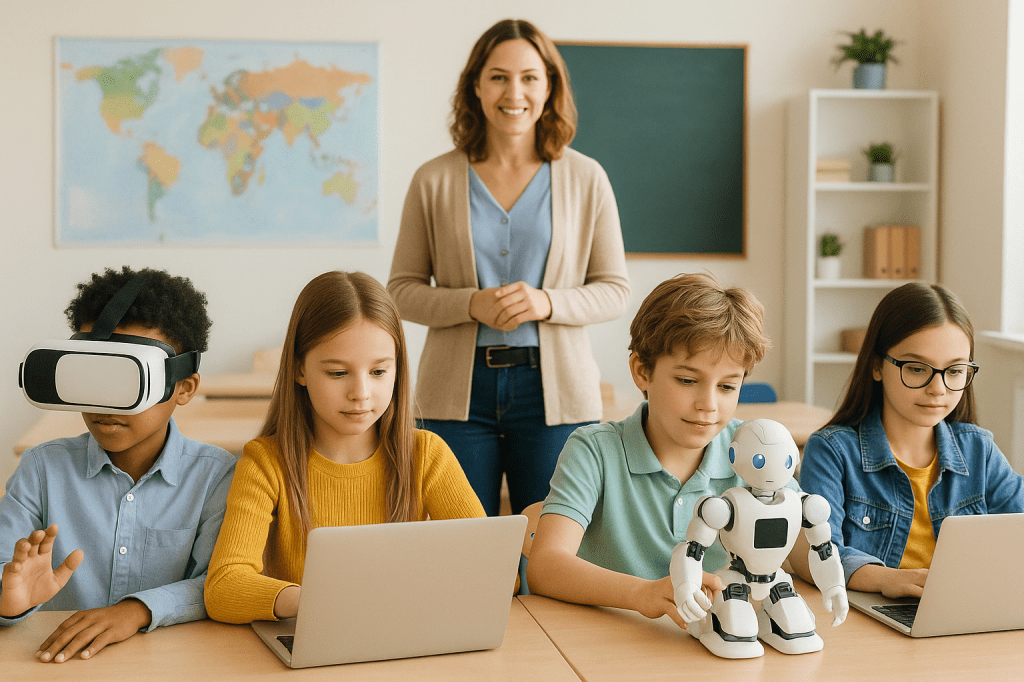

Working in education, though, the above poses an interesting question about how we support our students to thrive within this world, maximising the potential for social media to help them develop networks and opportunities, while minimising the risk of unrealistic expectations and imposter syndrome. My thinking is that this challenge isn’t easy to address, but what we can do is at least get started. Every discussion of this is likely to represent at least some forward and positive movement. And so I leave you with the above and the ask that you consider how and where these conversations might occur in your school. For those attending BETT, enjoy, and I look forward to hopefully seeing you there.